这是一个创建于 1547 天前的主题,其中的信息可能已经有所发展或是发生改变。

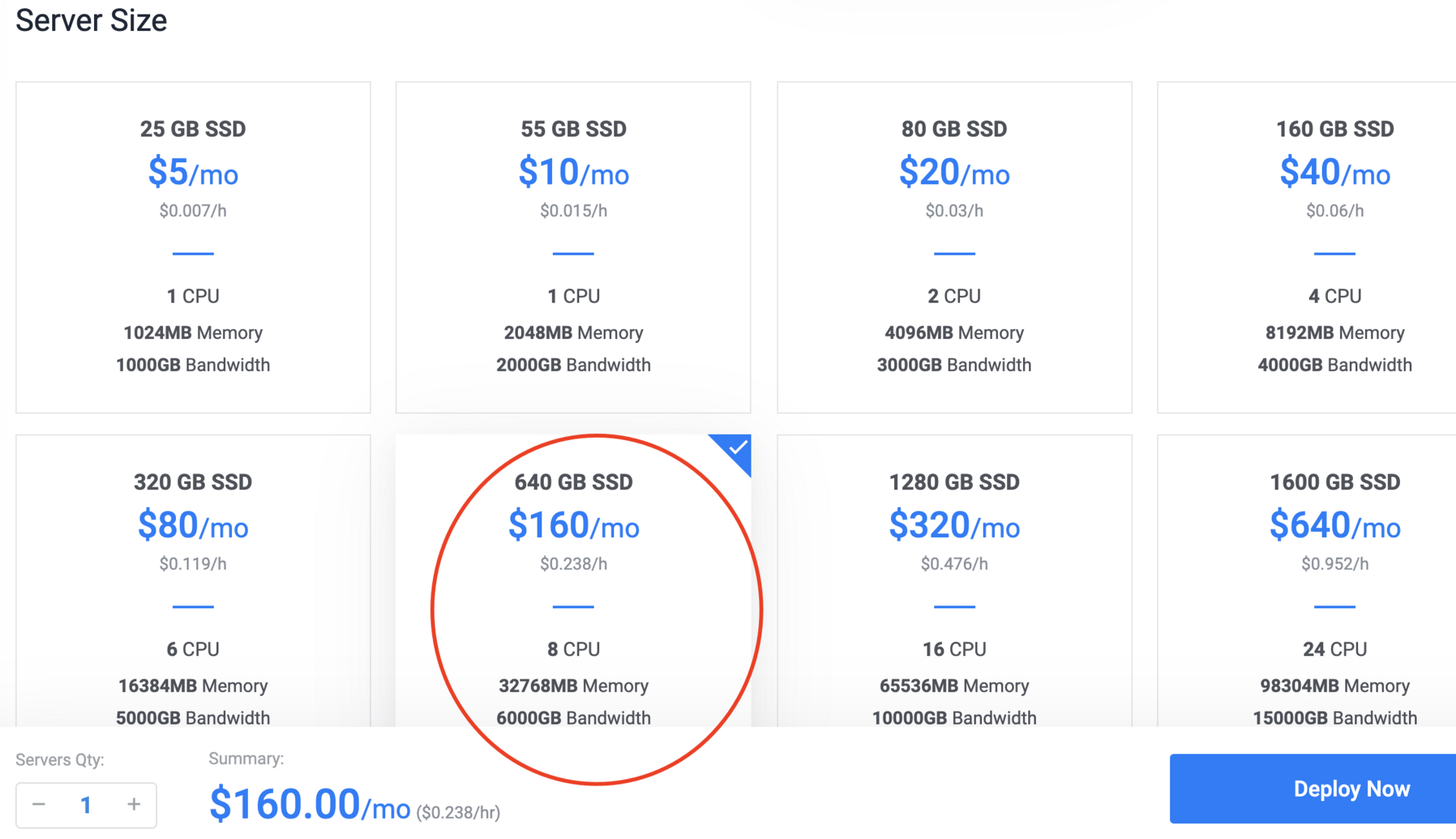

之前的一个帖子 单表 13 亿记录创建索引需要多长时间? 测试了在一台 8C32G 服务器给一列加索引大概需要 24 分钟,由于插入脚本没有优化,花费了大概 2 个小时左右。

最近研究了一下,还是 8C32G 服务器,最快的插入大概多久?先上结论:10 分钟。

步骤:

建表:

CREATE unlogged TABLE "test" (

"id" SERIAL PRIMARY KEY NOT NULL,

"ip" integer NOT NULL,

"domain" varchar DEFAULT 'drawerd.com'

);

再生成一个 12.8 亿条记录的 csv,大概 41G

f = File.open("ii.csv", "w")

(1..1280000000).each do |x|

f.puts [x, Random.random_number(1000000000), "#{x}.com"].join(",")

end

f.close

csv 概览:

tail -fn20 ii.csv

1279999981,379768240,1279999981.com

1279999982,440776589,1279999982.com

1279999983,194045965,1279999983.com

1279999984,643339201,1279999984.com

1279999985,397295532,1279999985.com

1279999986,308045177,1279999986.com

1279999987,860093304,1279999987.com

1279999988,557636470,1279999988.com

1279999989,882497774,1279999989.com

1279999990,987416658,1279999990.com

1279999991,728315013,1279999991.com

1279999992,163951092,1279999992.com

1279999993,524652,1279999993.com

1279999994,871673632,1279999994.com

1279999995,833545894,1279999995.com

1279999996,635775438,1279999996.com

1279999997,19686670,1279999997.com

1279999998,243310061,1279999998.com

1279999999,706814112,1279999999.com

1280000000,701386384,1280000000.com

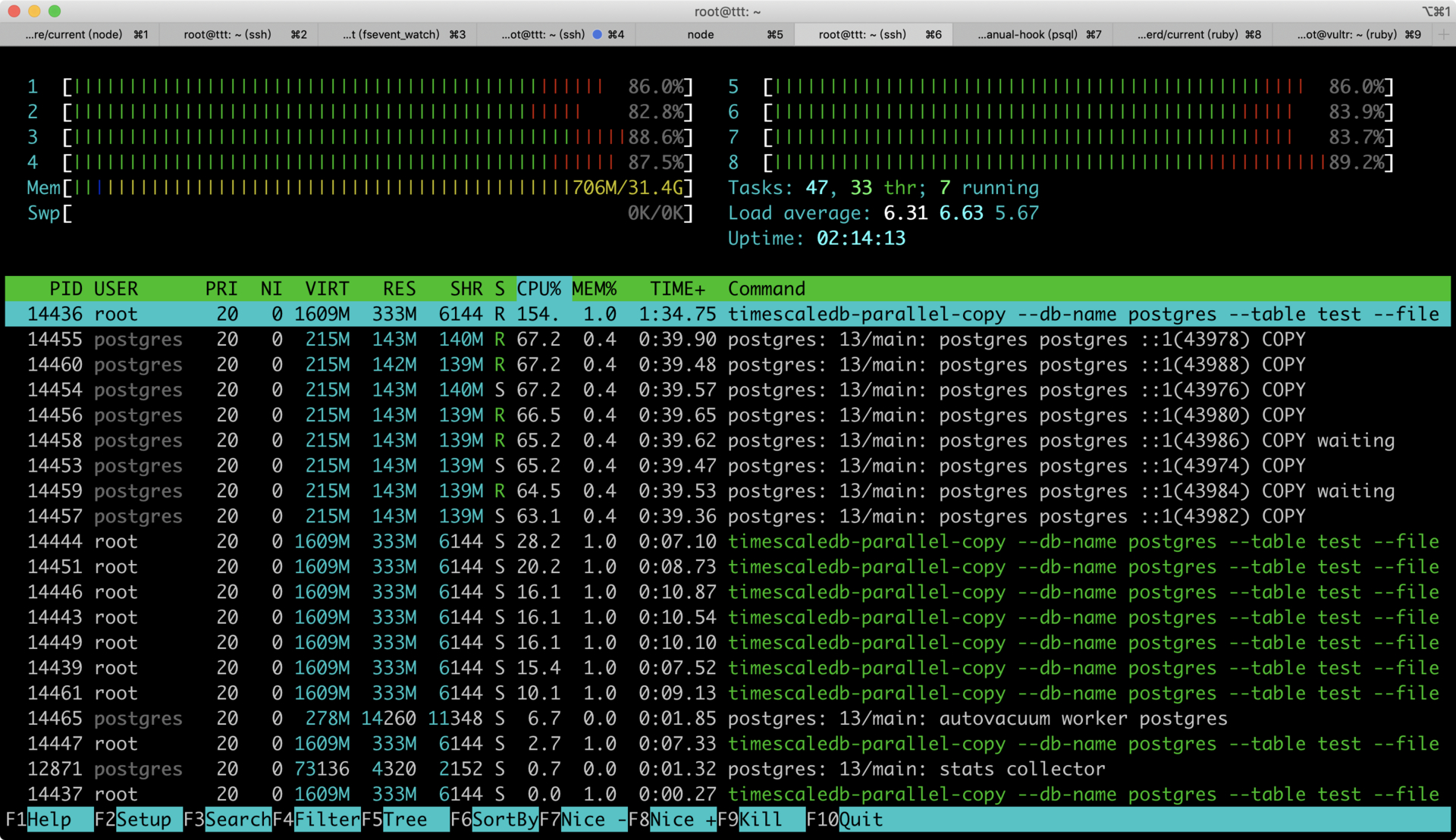

使用timesccaledb-parallel-copy,开 8 个进程,batch-size 设置为 10w,并发 copy 。之前以为 timesccaledb-parallel-copy 只能 timescaledb 用,现在测试了一下,纯 pg 也可以用。

timescaledb-parallel-copy --db-name postgres --table test --file ./ii.csv --workers 8 --reporting-period 30s -connection "host=localhost user=postgres password=helloworld sslmode=disable" -truncate -batch-size 100000

如果单纯使用 PG 的 copy 的话,只是利用单进程,使用 imescaledb-parallel-copy 会多进程同时 copy,从图上可以看到 CPU 几乎被跑满。可以达到每秒 200w 的写入。如果是 64 核的机器,只要不到两分钟,神不神奇...

timescaledb-parallel-copy --db-name postgres --table test --file ./ii.csv --workers 8 --reporting-period 30s -connection "host=localhost user=postgres password=helloworld sslmode=disable" -truncate -batch-size 100000

at 30s, row rate 2049993.13/sec (period), row rate 2049993.13/sec (overall), 6.150000E+07 total rows

at 1m0s, row rate 1913075.25/sec (period), row rate 1981529.69/sec (overall), 1.189000E+08 total rows

at 1m30s, row rate 1930261.28/sec (period), row rate 1964442.55/sec (overall), 1.768000E+08 total rows

at 2m0s, row rate 1943332.35/sec (period), row rate 1959165.00/sec (overall), 2.351000E+08 total rows

at 2m30s, row rate 1966667.61/sec (period), row rate 1960665.52/sec (overall), 2.941000E+08 total rows

at 3m0s, row rate 1919998.29/sec (period), row rate 1953887.65/sec (overall), 3.517000E+08 total rows

at 3m30s, row rate 1950001.96/sec (period), row rate 1953332.55/sec (overall), 4.102000E+08 total rows

at 4m0s, row rate 1949999.02/sec (period), row rate 1952915.86/sec (overall), 4.687000E+08 total rows

at 4m30s, row rate 1929999.19/sec (period), row rate 1950369.56/sec (overall), 5.266000E+08 total rows

at 5m0s, row rate 1873333.69/sec (period), row rate 1942665.98/sec (overall), 5.828000E+08 total rows

at 5m30s, row rate 1913172.63/sec (period), row rate 1939984.56/sec (overall), 6.402000E+08 total rows

at 6m0s, row rate 1843488.31/sec (period), row rate 1931943.89/sec (overall), 6.955000E+08 total rows

at 6m30s, row rate 1816666.49/sec (period), row rate 1923076.40/sec (overall), 7.500000E+08 total rows

at 7m0s, row rate 1962938.14/sec (period), row rate 1925924.19/sec (overall), 8.089000E+08 total rows

at 7m30s, row rate 1950394.13/sec (period), row rate 1927555.19/sec (overall), 8.674000E+08 total rows

at 8m0s, row rate 1963333.08/sec (period), row rate 1929791.31/sec (overall), 9.263000E+08 total rows

at 8m30s, row rate 1963333.49/sec (period), row rate 1931764.38/sec (overall), 9.852000E+08 total rows

at 9m0s, row rate 1966666.04/sec (period), row rate 1933703.36/sec (overall), 1.044200E+09 total rows

at 9m30s, row rate 1963333.35/sec (period), row rate 1935262.83/sec (overall), 1.103100E+09 total rows

at 10m0s, row rate 1949999.36/sec (period), row rate 1935999.66/sec (overall), 1.161600E+09 total rows

at 10m30s, row rate 1966667.45/sec (period), row rate 1937460.03/sec (overall), 1.220600E+09 total rows

at 11m0s, row rate 1933333.38/sec (period), row rate 1937272.46/sec (overall), 1.278600E+09 total rows

COPY 1280000000

1

ferock 2020-10-10 09:34:10 +08:00

学习了,感谢

|

2

mazyi 2020-10-10 09:58:33 +08:00

主要还是 ssd 厉害

|

4

sunziren 2020-10-10 10:12:50 +08:00

six ! six ! six !

|

5

Numbcoder 2020-10-10 10:28:21 +08:00

炮哥厉害!

|

6

opengps 2020-10-10 10:35:40 +08:00

这个测试的意义并不大,与其说是测试写入 io,倒不如说是在测试硬盘写入速度(直接总数据大小除以硬盘平均写入速度)

现实里的这种规模的表: 1,往往不会是自增 id (因为自增有极限值),而是选用时间戳作为聚集索引,写入时候需要考虑维护索引的开销 2,很难使同样的块大小,现实中的大量数据,往往不采用等长大小存储 3,ssd 硬盘的性能,可能有不同的实现方式,比如虚拟机下的 ssd,只能约等于普通物理机械硬盘的 io |

7

chihiro2014 2020-10-10 10:59:05 +08:00

对于这种,磁盘 IO 就是瓶颈了吧= =,内存型应该会更快

|

8

hooopo OP |

10

leafre 2020-10-10 11:17:04 +08:00

哈哈哈

|

11

opengps 2020-10-10 11:32:33 +08:00

|

12

hooopo OP @opengps 别扯分布式 snowflake uuid 就是 bigint 的 分布式么?你自己用 int4 爆表了是连范围都不清楚怪谁?

|

14

030 2020-10-10 11:49:09 +08:00

这就是跑分仔?有鲁大师评分吗

|

15

hooopo OP @030 啥叫跑分?你在 v 站上搜一下 看看其他人 mongo mysql pg 插入的速度 你就知道 10 分钟 13 亿是什么水平

|

16

chihiro2014 2020-10-10 11:53:40 +08:00 @opengps pg 中的那个叫做 clustered index,叫法不同罢了

|

18

liprais 2020-10-10 11:57:53 +08:00

“pg 我并不熟悉,我是基于我对关系型数据库应用的推断”

笑掉大牙 |

19

hooopo OP @liprais 这哥们能自己用 int4 主键存日志爆了表,然后来这里指导不能用有极值的类型,估计没跑爆表之前不知道 int4 范围,也是醉了

|

21

aec4d 2020-10-10 12:22:33 +08:00 via iPhone

插进去了查询也是个问题....... 我选择用 clickhouse

|

24

yushiro 2020-10-10 12:33:45 +08:00 via iPhone

这个……不就是对应 mssql 的 bcp 方式导入嘛,buck copy 肯定比单条 insert 快。

大概 10 多年前就这样来准备测试数据了。 当时的机器,单线程 insert 速度在 1000 条 /s 用 bcp 的方式导入,快 2 个数量级。 |

25

namelosw 2020-10-10 12:47:46 +08:00 via iPhone

这么大插入是不是可以考虑 Cassandra 或者 Kafka 风格的那种顺序读写,异步索引的方案了?

|

26

hooopo OP @yushiro bcp 只是生成了批量 insert 语句吧,怎么都不如 pg 的 copy 快:https://programmer.help/blogs/data-migration-import-and-export-tests-between-postgresql-and-mssql-sql-server.html

|

32

yanfany 2020-10-10 13:43:18 +08:00

一个纯技术交流的帖都能吵起来。。。v 站的讨论氛围恶化

|

34

shyling 2020-10-10 14:29:13 +08:00

原问题是 mysql 吧,这都换了几个数据库了=。=

不知道换 rocksdb 这种基于 lsm 的写入是个什么速度 |

36

yushiro 2020-10-10 19:05:01 +08:00 via iPhone

@hooopo 不,bcp 是导入导出 mssql 的 cli,数据需要事先生成的,跟你写的类似,先准备 csv 文件。

我觉得此题的要点就是,你所熟悉的 dbms 系统,有没有相应的 buck copy 实现,以及你是否熟悉这种用法。 |

37

kingsmalltwo 2020-10-10 20:01:37 +08:00

有一个思路,就是直接写数据库表对应的文件,然后重启服务加载。

|

38

yintianwen 2021-03-09 14:47:34 +08:00

PG 参数有什么需要优化的?

|

39

hooopo OP @yintianwen 没有优化 这个要按实际应用类型来吧

|